According to its creators at the HazyResearch group at Stanford, Snorkel is a system for rapidly creating, modeling and managing training data. I first heard of it when attending Prof. Christopher Ré's talk on his DeepDive project at the Data Science Summit in San Francisco almost 2 years ago. The DeepDive project has since morphed into a lighter-weight (and arguably more user-friendly) project called Snorkel. Snorkel was under active development at the time, but since then the Snorkel team has simplified its API and added many features, including, recently, the ability to work with Apache Spark. They also provide many more case studies with code than when I last looked at it, making it easier to get started.

Snorkel is a system for data programming, described in the NIPS 2016 paper Data Programming: Creating Large Training Sets, Quickly by Ratner, De Sa, Wu, Selsam & Ré. The user writes labeling functions, which programmatically label large amounts of unlabeled data using heuristics such as regularities of language, a technique called weak supervision. For example, finding a word ending in "land" or "shire" in a sentence could be evidence for a labeling function to mark up the sentence as belonging to a GEOGRAPHICAL class. You could also use distant supervision, where an ontology or dictionary generates such labels based on matches between phrases in the sentence and phrases in the dictionary. Other sources could be (non-expert) crowdsourcing or unsupervised statistical methods. In this way, multiple labels are generated for each data point. These labels are plentiful, since they are generated programmatically, but generally noisy, i.e., they may conflict with each other.
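To make the idea concrete, here is a minimal pure-Python sketch of two labeling functions for the GEOGRAPHICAL example: a weak-supervision rule on the "land"/"shire" suffix and a distant-supervision lookup against a toy dictionary. The function names, the tiny `PLACE_DICT` and the convention that 0 means "abstain" are assumptions for illustration, not Snorkel's API.

```python
import re

import numpy as np

# Hypothetical class IDs for this sketch; 0 means the function abstains.
ABSTAIN, GEOGRAPHICAL = 0, 1

# Toy stand-in for an ontology or gazetteer (an assumption, not real data).
PLACE_DICT = {"new york", "san francisco", "yorkshire"}


def lf_place_suffix(sentence):
    """Weak supervision: a word ending in 'land' or 'shire' hints at a place."""
    if re.search(r"\b\w+(?:land|shire)\b", sentence, flags=re.IGNORECASE):
        return GEOGRAPHICAL
    return ABSTAIN


def lf_place_dictionary(sentence):
    """Distant supervision: label if the sentence contains a dictionary phrase."""
    s = sentence.lower()
    return GEOGRAPHICAL if any(p in s for p in PLACE_DICT) else ABSTAIN


LFS = [lf_place_suffix, lf_place_dictionary]


def apply_lfs(sentences, lfs=LFS):
    """Label matrix: one row per sentence, one column per labeling function."""
    return np.array([[lf(s) for lf in lfs] for s in sentences], dtype=np.int64)


L = apply_lfs(["She moved to Scotland last year.",
               "Rain again in San Francisco.",
               "I like tea."])
print(L)
```

Each sentence gets one vote (or an abstention) per labeling function. Rules like the suffix check are cheap but noisy: they also fire on words such as "wonderland", which is why their outputs need to be reconciled.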

Snorkel provides a generative model that takes these noisy labels and returns a single clean (probabilistic) label per data point, i.e., the probability that the data point belongs to each of our classes given the labels it received. This clean label can then be used as input to train a discriminative model such as a classifier.
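What these probabilities mean can be illustrated with a much simpler stand-in: a naive-Bayes-style vote that assumes each source is conditionally independent, has a *known* accuracy, and spreads its mistakes evenly over the other classes. Snorkel's generative model instead learns source accuracies from the agreements and disagreements among the labels, so treat this as a sketch of what the output represents, not of how Snorkel computes it. `combine_votes` is a hypothetical helper; labels are 1-based with 0 meaning abstain.

```python
import numpy as np


def combine_votes(L, accuracies, k):
    """Posterior P(y = c | votes) for each row of label matrix L.

    Assumes a uniform class prior, conditionally independent sources with
    known accuracies strictly between 0 and 1, errors spread evenly over the
    other k - 1 classes (k >= 2), class IDs 1..k, and 0 meaning abstain.
    """
    L = np.asarray(L)
    acc = np.asarray(accuracies, dtype=float)
    n, m = L.shape
    log_post = np.zeros((n, k))
    for c in range(1, k + 1):
        for j in range(m):
            voted = L[:, j] != 0
            agree = L[:, j] == c
            # log-likelihood of source j's vote if the true class were c
            ll = np.where(agree, np.log(acc[j]), np.log((1 - acc[j]) / (k - 1)))
            log_post[:, c - 1] += np.where(voted, ll, 0.0)
    # normalize in log space for numerical stability, then exponentiate
    log_post -= log_post.max(axis=1, keepdims=True)
    post = np.exp(log_post)
    return post / post.sum(axis=1, keepdims=True)


post = combine_votes([[1, 1, 2], [0, 2, 2]], [0.8, 0.8, 0.8], k=2)
print(post)
```

For the first row, the unnormalized scores are 0.8 × 0.8 × 0.2 = 0.128 for class 1 and 0.8 × 0.2 × 0.2 = 0.032 for class 2, which normalize to 0.8 and 0.2.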

In this post, I describe a little proof-of-concept that I built in order to understand the Snorkel API better. My example uses data from their Crowdsourced Sentiment Analysis using Snorkel example, and is an analog for a use case I have in mind, where the crowdsourced noisy labels are replaced by outputs from unsupervised (or weakly supervised) sources.

The data for the crowdsourced sentiment analysis example consists of two files. The first is a raw data file of 1,000 weather tweets, each annotated for "emotion" by 20 crowdsourced workers, for a total of 20,000 records. The second file contains these 1,000 tweets manually annotated (presumably by a domain expert on weather emotions), making it the gold set. The original example uses only the high-confidence subset of the gold data so that it can evaluate the generated model; since I also wanted to evaluate the generated model, I did the same. The example uses Snorkel on Spark, but since the data is not that large, I decided to use Pandas on my local machine.

```python
from scipy.sparse import csr_matrix
from sklearn.metrics import accuracy_score, confusion_matrix, classification_report
from sklearn.model_selection import train_test_split
from snorkel import SnorkelSession
from snorkel.learning.gen_learning import GenerativeModel
import operator
import os
import numpy as np
import pandas as pd

DATA_DIR = "data"
RAW_FILE = os.path.join(DATA_DIR, "weather-non-agg-DFE.csv")
GOLD_FILE = os.path.join(DATA_DIR, "weather-evaluated-agg-DFE.csv")

# raw file (20 crowdsourced "emotion" labels per tweet) to dataframe
raw_df = pd.read_csv(RAW_FILE)
# gold file to dataframe
gold_df = pd.read_csv(GOLD_FILE)

# select high confidence gold data
gold_df = gold_df[gold_df["correct_category"] == "Yes"]
gold_df = gold_df[gold_df["correct_category_conf"] == 1]

# attach the gold "sentiment" to each crowdsourced "emotion" vote in raw_df
candidate_df = raw_df.join(gold_df.set_index("tweet_id"),
                           how="inner", on="tweet_id", rsuffix="_r")
candidate_df = candidate_df.loc[:, ["tweet_id", "worker_id",
                                    "emotion", "sentiment"]]
candidate_df.head()
```

The filtering to retain only high-confidence gold data results in 632 records. Joining with the raw data results in 12,640 (20 × 632) records. We now transform this into the standard X and y matrices as follows:

```python
# build lookup tables: map each label string to a 1-based integer ID
values = candidate_df.emotion.unique().tolist()
idx2label = {(i+1): x for i, x in enumerate(values)}
label2idx = {x: (i+1) for i, x in enumerate(values)}

# reduce by tweet_id: collect (worker_id, noisy_label) pairs and the gold label
labels, predictions = {}, {}
for row in candidate_df.itertuples():
    noisy_label = label2idx[row.emotion]
    pred_label = label2idx[row.sentiment]
    if row.tweet_id in labels:
        labels[row.tweet_id].append((row.worker_id, noisy_label))
    else:
        labels[row.tweet_id] = [(row.worker_id, noisy_label)]
    # the gold label is the same on every row of a tweet
    predictions[row.tweet_id] = pred_label

# sort noisy labels for each tweet_id by worker_id, keeping only the labels
for tweet_id in labels.keys():
    sorted_labels = sorted(labels[tweet_id], key=operator.itemgetter(0))
    labels[tweet_id] = [l for w, l in sorted_labels]

num_features = len(labels[tweet_id])
num_tweets = len(labels)

# one row per tweet (in tweet_id order), one column per vote
X = np.zeros((num_tweets, num_features), dtype=np.int64)
y = np.zeros((num_tweets, 1), dtype=np.int64)
for i, tweet_id in enumerate(sorted(labels.keys())):
    X[i] = np.array(labels[tweet_id], dtype=np.int64)
    y[i] = predictions[tweet_id]

Xtrain, Xtest, ytrain, ytest = train_test_split(X, y, train_size=0.7,
                                                test_size=0.3)
```

The lookup tables convert the unique emotion/sentiment labels into integer IDs. There are 5 unique emotions ("Neutral / author is just sharing information", "Tweet not related to weather condition", "Positive", "Negative" and "I can't tell"), and they map to the numbers [1, 2, 3, 4, 5]. Note the 1-based IDs: a 0-based mapping results in terrible accuracy numbers, most likely because Snorkel's label matrix reserves 0 for an abstaining (missing) label, so whichever class was mapped to 0 would be read as no vote at all. You need to undo this shift when converting the predictions from the generative model back to these class IDs, but we will get to that later.

The code gives us an X matrix of shape (632, 20) and a y vector of labels (derived from the gold_df.sentiment column) of shape (632, 1). We will use the label vector only for evaluation. Splitting X and y 70/30 into training and test sets gives us Xtrain, ytrain, Xtest and ytest of shapes (442, 20), (442, 1), (190, 20) and (190, 1) respectively.
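The dictionary-based reduction can also be expressed with pandas. The sketch below is a hypothetical vectorized equivalent (`build_label_matrix` is not from the original example): it sorts votes by worker_id within each tweet, numbers them with `cumcount`, and pivots so that each column is a vote slot, matching the loop's ordering. It assumes every tweet has the same number of votes; otherwise the pivot contains NaN and the integer conversion raises an error.

```python
import numpy as np
import pandas as pd


def build_label_matrix(df, label2idx):
    """Pivot long-format (tweet_id, worker_id, emotion, sentiment) rows into
    X (one row per tweet, votes ordered by worker_id) and a column vector y."""
    df = df.sort_values(["tweet_id", "worker_id"]).copy()
    df["noisy"] = df["emotion"].map(label2idx)
    # position of each vote within its tweet, after sorting by worker_id
    df["slot"] = df.groupby("tweet_id").cumcount()
    X = (df.pivot(index="tweet_id", columns="slot", values="noisy")
           .to_numpy(dtype=np.int64))
    # groupby sorts by tweet_id, so y rows line up with the pivoted X rows
    y = (df.groupby("tweet_id")["sentiment"].first()
           .map(label2idx)
           .to_numpy(dtype=np.int64)
           .reshape(-1, 1))
    return X, y


toy = pd.DataFrame({
    "tweet_id": [2, 2, 1, 1],
    "worker_id": [5, 3, 9, 4],
    "emotion": ["Positive", "Negative", "Negative", "Positive"],
    "sentiment": ["Negative", "Negative", "Positive", "Positive"],
})
X_toy, y_toy = build_label_matrix(toy, {"Positive": 1, "Negative": 2})
print(X_toy, y_toy)
```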

As a baseline for our evaluation, we first compute the statistics for a simple majority vote over the crowdsourced workers' labels.

```python
def majority_preds(inputs):
    # majority vote over the crowdsourced labels in each row
    preds = []
    for i in range(inputs.shape[0]):
        votes = inputs[i]
        counts = np.bincount(votes)
        preds.append(np.argmax(counts))
    return np.array(preds, dtype=np.int64)


def report_results(title, preds, labels):
    # scikit-learn metrics expect (y_true, y_pred); flatten the (n, 1) labels
    labels = np.ravel(labels)
    print("\n\n**** {:s} ****".format(title.upper()))
    acc = accuracy_score(labels, preds)
    cm = confusion_matrix(labels, preds)
    cr = classification_report(labels, preds)
    print("accuracy: {:.3f}".format(acc))
    print("\nconfusion matrix")
    print(cm)
    print("\nclassification report")
    print(cr)


preds_train = majority_preds(Xtrain)
report_results("train", preds_train, ytrain)
preds_test = majority_preds(Xtest)
report_results("test", preds_test, ytest)
```


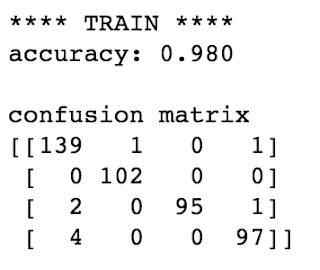

As you can see, the accuracies for majority voting are quite high, 0.98 for the training set and 1.0 for the test set.
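The loop in `majority_preds` can also be vectorized. This hypothetical `majority_preds_vec` counts votes for all rows at once with `np.add.at`, and ignores 0 votes in case abstentions are present. One detail applies to both versions: `np.argmax` returns the first maximum, so ties are broken in favor of the lowest class ID.

```python
import numpy as np


def majority_preds_vec(votes, k):
    """Row-wise majority vote over 1-based labels 1..k; 0 (abstain) is ignored.

    Rows where every vote is 0 get prediction 0. Ties go to the lowest ID.
    """
    votes = np.asarray(votes)
    n = votes.shape[0]
    counts = np.zeros((n, k + 1), dtype=np.int64)
    # row indices broadcast against the (n, m) vote matrix
    np.add.at(counts, (np.arange(n)[:, None], votes), 1)
    counts[:, 0] = 0  # do not let abstentions win
    return counts.argmax(axis=1)


votes = np.array([[1, 1, 2], [2, 3, 3], [1, 2, 0], [0, 0, 0]])
print(majority_preds_vec(votes, k=3))
```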

Next, we define Snorkel's generative model and train it for 30 epochs. I specify the cardinality (the number of classes) explicitly here, but the model can infer it by itself as well. The marginals give, for each tweet, the probability of belonging to each of the 5 classes; for the training and test sets, their shapes are (442, 5) and (190, 5) respectively.

```python
session = SnorkelSession()

gen_model = GenerativeModel(lf_propensity=True)
gen_model.train(
    Xtrain,
    reg_type=2,
    reg_param=0.1,
    epochs=30,
    cardinality=5
)

train_marginals = gen_model.marginals(csr_matrix(Xtrain))
test_marginals = gen_model.marginals(csr_matrix(Xtest))
```

Next, we evaluate the generative model and see how it stacks up against our simple-minded majority vote model. The best label is just the class with the maximum probability, but since the columns of the marginals are 0-indexed and our class IDs are 1-based, we need to add one to the argmax value to get the correct label.
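A small round-trip sketch shows the index shift in isolation: column c of the marginals holds the probability of class ID c + 1, and `idx2label` turns that ID back into the original string. The three-label `values` list and the `decode` helper are made up for illustration; in the post, the list comes from `candidate_df.emotion.unique()`.

```python
import numpy as np

# Hypothetical label order; the real one comes from candidate_df.emotion.unique()
values = ["Positive", "Negative", "I can't tell"]
idx2label = {(i + 1): x for i, x in enumerate(values)}
label2idx = {x: (i + 1) for i, x in enumerate(values)}


def decode(marginals):
    """Map each row of class probabilities to a 1-based ID and its label."""
    ids = np.argmax(marginals, axis=1) + 1  # column c corresponds to ID c + 1
    return ids, [idx2label[int(i)] for i in ids]


ids, names = decode(np.array([[0.1, 0.7, 0.2],
                              [0.6, 0.3, 0.1]]))
print(ids, names)
```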

```python
# marginal columns are 0-indexed; add 1 to recover the 1-based class IDs
train_predictions = np.argmax(train_marginals, axis=1) + 1
test_predictions = np.argmax(test_marginals, axis=1) + 1

report_results("train (gen model)", train_predictions, ytrain)
report_results("test (gen model)", test_predictions, ytest)
```


Results for Snorkel's generative model don't seem to be as good as our majority vote model, but they are quite good nevertheless. The next step would be to try building a more realistic generative model using the full raw dataset and evaluate it against the full gold set, and then build a discriminative model to do the classification on that. I will talk more about this in my next post.
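As a rough sketch of that next step, one way to train a discriminative model on probabilistic labels with scikit-learn is to repeat each example once per class and weight each copy by its marginal probability. This is an assumption about how one might do it, not the approach from the follow-up post; the bag-of-words features, `fit_on_marginals` and the toy data are all hypothetical, and 1-based class IDs are kept to match the rest of the post.

```python
import numpy as np
from scipy.sparse import vstack
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.linear_model import LogisticRegression


def fit_on_marginals(texts, marginals, C=1.0):
    """Fit a classifier on soft labels: each example is repeated once per
    class, and copy c is weighted by P(class c + 1) from the marginals."""
    vec = CountVectorizer()
    X = vec.fit_transform(texts)
    n, k = marginals.shape
    # k stacked copies of the features; block c gets the 1-based label c + 1
    X_rep = vstack([X] * k, format="csr")
    y_rep = np.repeat(np.arange(1, k + 1), n)
    # marginals.T flattened row-major lines up weights with the stacked blocks
    w_rep = marginals.T.reshape(-1)
    clf = LogisticRegression(C=C, max_iter=1000)
    clf.fit(X_rep, y_rep, sample_weight=w_rep)
    return vec, clf


texts = ["sunny and warm", "lovely sunny day", "cold rain again", "awful rain storm"]
marginals = np.array([[0.9, 0.1], [0.8, 0.2], [0.2, 0.8], [0.1, 0.9]])
vec, clf = fit_on_marginals(texts, marginals)
print(clf.predict(vec.transform(["sunny", "rain"])))
```

Minimizing the weighted log loss over the repeated rows is the same as minimizing the cross-entropy between the classifier's predicted distribution and the marginals, so no information is thrown away by rounding the marginals to hard labels first.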

Obviously there is a lot more to Snorkel than I have covered here. It is a complete package that provides most of the tools you are likely to need for data programming. To learn more about Snorkel, check out the Snorkel website on GitHub. If you have lots of raw data but are struggling to build large labeled datasets to feed your machine learning algorithms, I think you will find it well worth the effort.